Moderation Actions

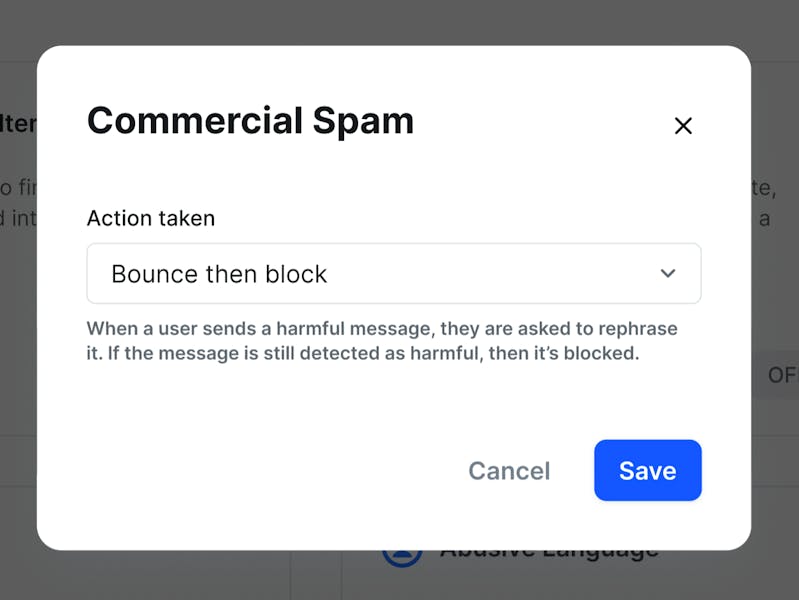

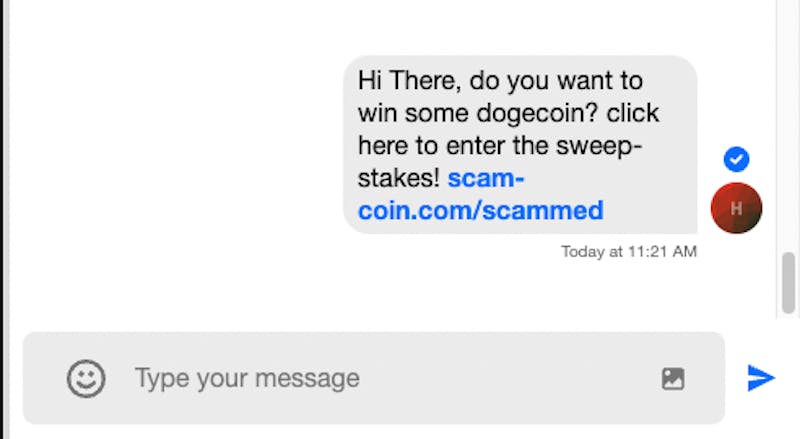

Stream AutoMod makes it possible to implement behavioural nudges that correct user behaviour. If our AI model classifies a message as harmful, the sender is informed and can edit the message before it is sent to others in the channel. This reduces the harm originating from good actors in chat, because they get the chance to remove harmful content before it reaches other people. It also makes it harder for bad actors to send harmful messages. Finally, it reduces the workload for moderators, as a significant portion of harmful messages will be corrected by the senders themselves.

Below is a list of actions that are configurable from the AutoMod dashboard.

Configurable actions

You can assign each harm engine an action to perform when it detects a harmful message.

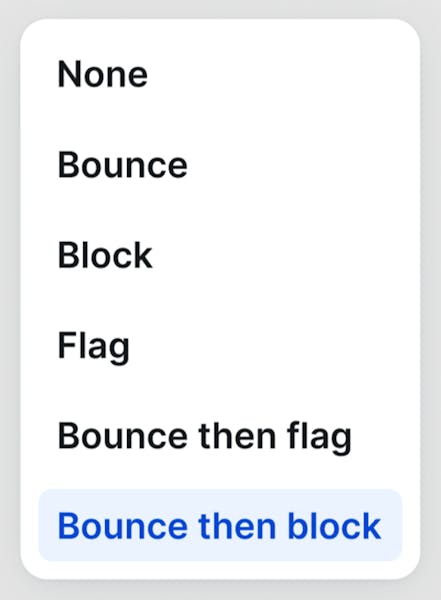

None: The harm engine is disabled and no messages are checked.

![None: Engine not active flow]()

Infinite Bounce: When a user sends a harmful message, they are asked to rephrase it until it is no longer detected as harmful.

![Infinite Bounce flow]()

Infinite Bounce

Infinite Bounce can be used to provide a prompt on the front-end to the sending user each time they attempt to send an offending message.

Flag: When a user sends a harmful message, it is sent to the channel and flagged in the moderation dashboard.

![Flagging flow]()

Flag

Flag can be used when you do not want to inform the user that their message has been detected, or when you do not want to disrupt the user flow at all.

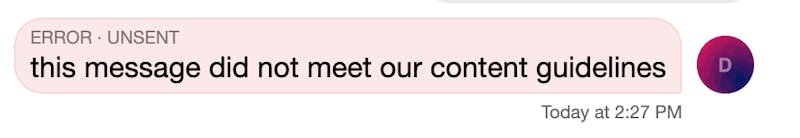

Block: When a user sends a harmful message, it is blocked and not shown in the channel. It will be shown in the moderation dashboard.

![Blocking flow]()

Block

Block can be used when you want to prevent the sending of a message entirely, in a way that is visible to the sending user. This can also be exposed to other users in the channel.

Bounce then flag: When a user sends a harmful message, they are asked to rephrase it. If the message is still detected as harmful, then it is sent and flagged in the moderation dashboard.

![Bounce then Flag flow]()

Bounce then Flag

Bounce then flag can be used to implement behavioural nudges, encouraging users to reconsider their message before it is sent to other users.

Bounce then block: When a user sends a harmful message, they are asked to rephrase it. If the message is still detected as harmful, it is blocked and no further edits are allowed.

![Bounce then Block flow]()

Bounce then Block

Bounce then block can be used as a more forceful form of Bounce then flag, preserving the message in the ‘blocked messages’ view in the moderation dashboard for review, but not sending it to the other users in the channel.
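The first-attempt behaviour of the actions above can be summarised in a small lookup. This is only a sketch of the rules as described in this section, not Stream's implementation: the `Action` values, the `Outcome` fields, and the `first_send_outcome` function are hypothetical names chosen for illustration and are not dashboard or API identifiers.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    # Hypothetical labels for the dashboard actions described above.
    NONE = "none"
    INFINITE_BOUNCE = "infinite_bounce"
    FLAG = "flag"
    BLOCK = "block"
    BOUNCE_THEN_FLAG = "bounce_then_flag"
    BOUNCE_THEN_BLOCK = "bounce_then_block"


@dataclass(frozen=True)
class Outcome:
    bounced: bool       # the sender is asked to rephrase
    delivered: bool     # the message reaches the channel
    in_dashboard: bool  # the message appears in the moderation dashboard


def first_send_outcome(action: Action, harmful: bool) -> Outcome:
    """Outcome of the first attempt to send a message under `action`."""
    # A disabled engine checks nothing, and a harmless message passes through.
    if action is Action.NONE or not harmful:
        return Outcome(bounced=False, delivered=True, in_dashboard=False)
    if action is Action.FLAG:
        return Outcome(bounced=False, delivered=True, in_dashboard=True)
    if action is Action.BLOCK:
        return Outcome(bounced=False, delivered=False, in_dashboard=True)
    # All bounce variants behave the same on the first attempt: the message
    # goes back to the sender and is neither delivered nor shown in the dashboard.
    return Outcome(bounced=True, delivered=False, in_dashboard=False)
```

The bounce variants only differ in what happens on a later attempt, which is why they share one branch here.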

Details

Flag

This is the least intrusive form of moderation. If the message is considered harmful, the response from the Chat API does not trigger any error. The message is shown to other users and stored in the database, and it appears in the dashboard message inbox for the moderator to review.

Block

This is the strictest form of moderation. If the message is considered harmful, the response from the Chat API triggers an error. The message is not shown to other users, but it is stored in the database and appears in the moderation dashboard for the moderator to review.

Bounce

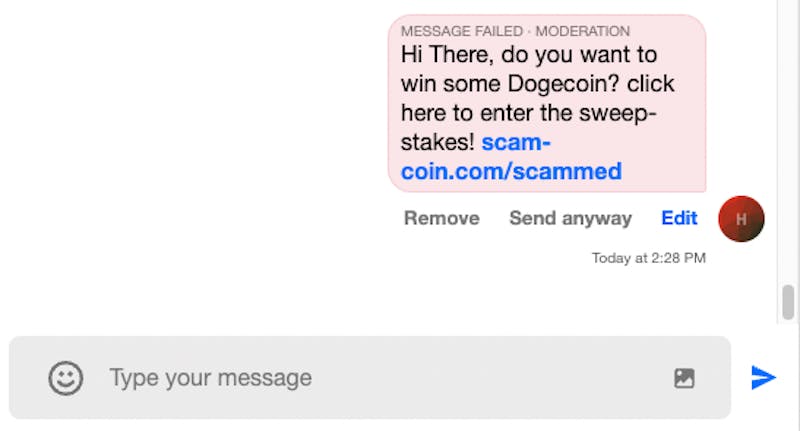

When a user sends a message and our AI models consider it harmful, it is bounced back to the user: the Chat API returns a message with type=error, along with moderation details indicating which model detected the harm.
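A client without the frontend SDKs could detect a bounce by checking the type of the returned message. The sketch below assumes the response message is available as a plain dictionary with a `type` key, and it treats every `error` type as a moderation bounce. The `bounce_prompt` function and the prompt wording are hypothetical, and the moderation details are not read because their field names are not given here.

```python
from typing import Optional


def bounce_prompt(message: dict) -> Optional[str]:
    """Return a prompt for the sender if the message was bounced, else None.

    Assumes `message` is the message returned by the Chat API, represented
    as a dict with a "type" key (an assumption made for this sketch).
    """
    if message.get("type") != "error":
        return None  # the message was accepted; nothing to show
    # Hypothetical wording; a real client would likely also use the
    # moderation details to explain which kind of harm was detected.
    return (
        "Your message may violate the platform guidelines. "
        "You can edit it, delete it, or send it anyway."
    )
```

Returning `None` for accepted messages keeps the caller's logic to a single check before rendering the prompt.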

The user is informed that they violated the platform guidelines and, if frontend SDKs are present, is invited to rephrase what they just wrote. The user can delete the message, send it anyway, or modify it. You can change these options in your client app's implementation. Without frontend SDKs, the user sees the message as blocked, with some information on why it was blocked. Until the user takes action, the message is not stored on Stream’s servers and is not shown in the dashboard.

The options are as follows:

delete: the message disappears from the chat (does not give a new API response)

modify: a new message will be sent for evaluation and will be bounced again if it's still harmful

send anyway: if the message text does not change, it will:

Only bounce: be bounced back again.

Bounce then flag: be flagged. If the same user tries to send the same message in the channel within a short time, it will be flagged again.

Bounce then block: be blocked, with no further editing allowed. If the same user tries to send the same message in the channel within a short time, it will be blocked again.
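The options above can be read as a small decision table. The following sketch encodes that table as described in this list; `BounceMode`, `Choice`, and `resolve_choice` are hypothetical names used only for illustration, and the returned strings are labels for this example rather than API values.

```python
from enum import Enum


class BounceMode(Enum):
    ONLY_BOUNCE = "only_bounce"
    BOUNCE_THEN_FLAG = "bounce_then_flag"
    BOUNCE_THEN_BLOCK = "bounce_then_block"


class Choice(Enum):
    DELETE = "delete"
    MODIFY = "modify"
    SEND_ANYWAY = "send_anyway"


def resolve_choice(mode: BounceMode, choice: Choice, still_harmful: bool = True) -> str:
    """Result of the sender's choice after a message has been bounced."""
    if choice is Choice.DELETE:
        # The message disappears from the chat; no new API response.
        return "removed"
    if choice is Choice.MODIFY:
        # The edited text is evaluated as a new message.
        return "bounced" if still_harmful else "sent"
    # SEND_ANYWAY with unchanged text: the result depends on the configured action.
    return {
        BounceMode.ONLY_BOUNCE: "bounced",
        BounceMode.BOUNCE_THEN_FLAG: "flagged",
        BounceMode.BOUNCE_THEN_BLOCK: "blocked",
    }[mode]
```

For example, `resolve_choice(BounceMode.BOUNCE_THEN_FLAG, Choice.SEND_ANYWAY)` evaluates to `"flagged"`, matching the Bounce then flag entry above.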

Sequence diagram for flag and block:

Note: The delete and edit options do not produce a new API response for the bounced message. Delete removes the message from the message list for the sending user, and edit sends a new message.